In this issue, we’d like to cover some of the best resources we’ve found on the topic of natural language.

Whether it be natural language understanding, processing, or generation, here are a few articles we’ve been reading this week that should bring some clarity to this particular subset of AI.

1) AI in Your Workday: A Quick Guide to Natural Language Processing

By Alex Delong, Product Marketer at GoToMeeting

Believe it or not, Natural Language Processing (NLP) is one of the most commonly used applications of AI. Have you ever stopped to think about how machines are able to understand us when we do not speak Python, Java, or C++?

In this quick guide to NLP, Alex Delong unravels some of the mystery behind this technology. You’ll get a better understanding of why customer service is improving and why science seems to be getting smarter.

“…New technologies and AI will continue to fulfill the simple and mundane tasks for people, giving them the time back in their day to do what they do best – brainstorming, collaborating, and innovating…”

Check out the full article here.

2) A Review of the Recent History of Natural Language Processing

By Sebastian Ruder, Research Scientist at Aylien

This incredibly in-depth blog series expands on Herman Kamper and Sebastian Ruder’s session, “The Frontiers of Natural Language Processing,” given at the Deep Learning Indaba 2018 event.

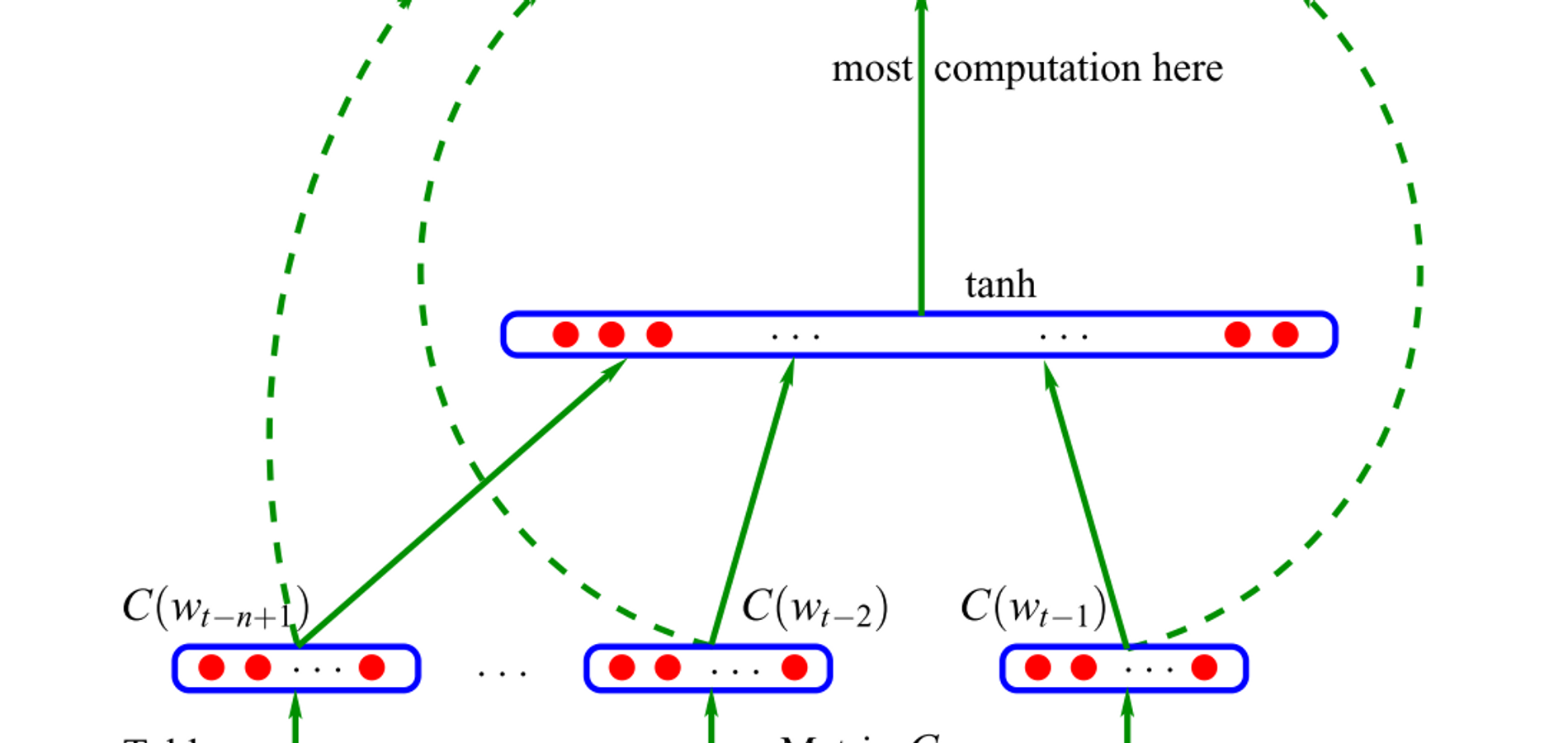

The first post covers major advances in NLP from 2001 to 2018, focusing on neural network-based methods. The second post will discuss open problems in NLP, so keep an eye out for part 2 in the next few months.

“…Due to the flexibility of sequence-to-sequence models, it is now the go-to framework for natural language generation tasks, with different models taking on the role of the encoder and the decoder.

Importantly, the decoder model can not only be conditioned on a sequence, but on arbitrary representations. This enables the system to generate a caption based on an image, text based on a table, and a description based on source code changes, for instance…”

Read the full article here.

3) Building Safe Artificial Intelligence: Specification, Robustness, and Assurance

By Pedro A. Ortega, Vishal Maini, and the DeepMind safety team

If artificial intelligence (AI) is a rocket, then we will all have tickets on board some day. And, as in rockets, safety is a crucial part of building AI systems. Guaranteeing safety requires carefully designing a system from the ground up to ensure the various components work together as intended, while developing all the instruments necessary to oversee the successful operation of the system after deployment.

“…We are building the foundations of a technology which will be used for many important applications in the future. It is worth bearing in mind that design decisions which are not safety-critical at the time of deployment can still have a large impact when the technology becomes widely used.

Although convenient at the time, once these design choices have been irreversibly integrated into important systems the tradeoffs look different, and we may find they cause problems that are hard to fix without a complete redesign…”

Read the full article here.

4) Complete Guide to Word Embeddings

By George-Bogdan Ivanov, Founder of Textera.ai

This guide covers some of the most popular algorithms used to explore word embeddings, neural networks, deep learning, and classification. From establishing synonyms to automating feature extraction, this guide will show you how each model performs.

“…Ok, so what are word vectors (or embeddings)? They are vectorized representations of words. In order for the representation to be useful (to mean something) some empirical conditions should be satisfied:

- Similar words should be closer to each other in the vector space

- Allow word analogies: “King” – “Man” + “Woman” = “Queen”

In fact, word analogies are so popular that they’re one of the best ways to check if the word embeddings have been computed correctly…”
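To make those two conditions concrete, here is a minimal sketch in plain Python. The four-dimensional vectors are made up for illustration (real embeddings are learned from text and are much larger), and the helper names `cosine_similarity` and `analogy` are our own, not taken from the guide. It uses the commonly cited form of the analogy, king - man + woman ≈ queen.

```python
import math

# Toy vectors invented for this example; NOT learned embeddings.
# Dimensions loosely stand for: (royalty, maleness, femaleness, fruitiness).
TOY_VECTORS = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man":   [0.1, 0.9, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.9, 0.0],
    "apple": [0.0, 0.1, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, vectors=TOY_VECTORS):
    """Return the word whose vector is closest to vec(a) - vec(b) + vec(c).

    The three input words are excluded from the candidates, which is the
    usual convention when evaluating analogies.
    """
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine_similarity(vectors[w], target))


if __name__ == "__main__":
    # Condition 1: similar words sit closer together than unrelated ones.
    print(cosine_similarity(TOY_VECTORS["king"], TOY_VECTORS["queen"]))
    print(cosine_similarity(TOY_VECTORS["king"], TOY_VECTORS["apple"]))
    # Condition 2: the analogy king - man + woman lands nearest to "queen".
    print(analogy("king", "man", "woman"))
```

With trained embeddings the same check is usually run over a large set of analogy pairs rather than a single example, and the result is only approximately equal to the expected word.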

Read the full article here.

Let us know what you’re reading on Twitter @LuminosoTech